Team Seven/Final Paper

Team pleit! competed in Maslab 2012 and won first place. All source code is available on GitHub.


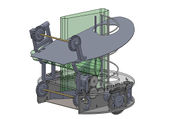

Robot

Mechanical design

Frame

Our robot was designed to have a circular frame with the drive wheels placed along the central axis. This allowed the robot to turn in place without changing its external footprint, thus avoiding possible collisions with walls and other objects when turning. We also implemented a T-nut and slot design on all load-bearing structures (base plates and walls) to keep them rigid and to ensure that they assembled correctly at right angles.

Wheels

We designed our wheels with 44 spikes so they wouldn’t slip on the carpet and used a water jet to cut them out of 1/8-inch aluminum. They were originally 5 inches in diameter, but this proved too much for the motors: the wheels got so much traction that the motors could not reliably drive the robot. We had over-corrected for slipping. We changed the wheels to 3.5 inches in diameter and rounded the spikes, and we had no more problems.

Drive Motors

Our team acquired Faulhaber motors that, while smaller and weaker, were much lighter and had built-in quadrature encoders. We decided to make our initial designs with these drive motors in mind. We were not completely sure if they would be strong enough to drive the entire robot, but knowing Faulhaber made reliable, robust motors, we wanted to try them. These motors came with right-angle gearboxes, which we used since the direct output shaft was only about 5mm long. Unfortunately these gearboxes were the source of nonstop problems for the next two weeks (loosening set-screws, slipping gears). We finally decided to use the provided Trossen motors, re-designing the robot’s frame. (Actually the new design supports both the Faulhaber and Trossen motors.)

Ball-sucker

Rubber band rollers work great for sucking in balls, which is why most teams use them. We found our measurements for the dimensions of the roller and how high to place it off the ground through numerous tests.

Helix

To raise the captured balls to our storage area, we implemented a vertical helix (Archimedes screw) in the rear of the robot. We chose this over a four-bar linkage after identifying critical weaknesses of the linkage:

1. It was only able to hold a few balls at a time, and it seemed a real advantage to hold on to around 22 balls until the end of a match (especially when playing a strong opponent).

2. To score, all the balls had to be lifted together, requiring a lot of work in a short period of time.

3. It took up a lot of space at the bottom of the robot, forcing electronics to be mounted high up and fairly awkwardly.

The helix was a better choice for this application because it could lift multiple balls at once (in the final match, there was a moment it was lifting four balls at once). To construct the helix, we marked a piece of 2-inch outer-diameter PVC pipe with the desired pitch and then bent welding rod around it, following the markings. Supports were welded to the helix for rigidity.

Ball Rack

As the robot was becoming heavy, we constructed the top ball rack out of lightweight aluminum. The top rack was capable of storing 16 balls at once (more storage space was found in the lower level and in the helix itself).

Ball-spitter

To launch the balls over to the opponent’s side, we installed a second rubber band roller at the top. This roller was driven by a high-speed motor capable of sending the balls well into the five-point region.

Electrical concerns

When designing our electrical system and interfaces, much attention was paid to the layout, wiring, and modularity of the hardware. The ability to quickly and effectively replace components without the need to desolder connections is extremely advantageous to rapid hardware development.

Arduino Protoboard

In order to keep things simple, we decided to populate the Arduino protoboard shield in a sensible, neat manner. Every connection to the Arduino was coupled by a male or female header connection. It probably would have been a good idea to standardize the polarity of the connections (male on board, female on peripherals or vice versa), but it wouldn't have made much of a difference. We mounted the qik motor controller vertically to save space, added two six-pin headers for modular motor connections with the possibility of using the quadrature encoders on our fancy Faulhaber motors, mounted all four IR headers in a sensible row using the +/gnd supply rails, and used the double-width header on the back of the Arduino shield to arrange the two-pin headers connected to the bump switches.

Perf-boarding is a bitch. Here are a few tips to keep things from getting out of hand:

- Don't be dumb when it comes to wiring. Signals traveling down wires are actually carried by the electromagnetic fields between the wire and ground (the ground plane, if there is one). Crossing wires over each other will cause cross-talk and capacitive coupling.

- Keep it neat: it'll be way easier to debug your circuits if you can easily see which wires go where.

- Place components/headers sensibly. If you want to connect to a pin, put your component/header near that pin! If you have a huge header that just needs I/O connections, connect it to the nearest I/O's!

- Don't work when you don't have to. Use the protoboard's pre-routed connections (power rails, pin connections, etc) to make your life easier.

- Use both sides of the protoboard to help clean up your wiring mess.

- Cut wires to length and pre-bend them to make your circuit neat, but don't over-do it.

Motors

The stock motors are pretty cruddy, power-hungry 12V gear-head motors. They consume about 1.5 A at stall, and aren't even very torquey. They're also electrically noisy in operation, so we recommend putting a 1uF non-electrolytic capacitor in parallel with the motor terminals.

Universal Waste used two stock motors for drive, one stock motor for the ball sucker, an additional stock motor for the helix, and a Japanese high-speed geared motor for the ball spitter. All of the motors were connected to the Arduino via male/female header pairs for quick detachment and modularity.

It would have been useful to have encoders on our drive motors, probably even on our helix and sucker motor as well.

Motor Drivers

The Pololu Qik motor drivers included in the kit are easy to use, but can get upset if you're not careful with them. When using the stock motors in conjunction with the qik controllers, we found that you had to enable low frequency PWM mode to reduce the power dissipation due to fast switching at high currents, otherwise the thermal protection circuitry would kick in and pulse our motors. The qik datasheet has information on this subject.

We decided not to use Qik motor drivers for all of our motors because PWM/digital control of our auxiliary motors was simpler and we didn't need bi-directionality. To drive our sucker, helix, and spitter, we used three N-channel MOSFETs (IR1407's), each on the low side of its motor, effectively switching the motor on and off. We chose 1407's because of their 4V maximum gate threshold voltage, which meant they could easily be switched on by the Arduino's 5V outputs. We understand that the gate charge is a bit much for the Arduino to handle at any fast rate, so we used a slow PWM frequency or no PWM at all. We had no trouble with heat dissipation from our FETs, but there were some grounding/noise issues when it came to power routing.

Power Routing

When dealing with reasonably fast logic and reasonably high current, it's important to not mess up ground, signal, and power routing. For example, when the source of our MOSFETs was tied to signal ground near the IR sensors, the IR sensors reported lots of noisy measurements. The solution was to separate the higher-power wires, including grounds and supply rails, from the low-power logic rails and signal-carrying wires. We made the majority of grounds on our protoboard signal ground, then routed all of the power wires directly to our 12V header on the qik board. More information about power routing can be found by searching for 'star grounding'.

IR Sensors

We didn't do anything fancy with the IR sensors. We had two IR sensors on the side of our robot and two in the front. We did find that mounting the IR sensors vertically (so that they look like a colon : ) removed a lot of noise when trying to sense the distance of a wall or pillar at an angle. We'd encourage future teams to mount their IR sensors vertically.

We also placed a 1uF cap across the power rails for the IR sensors for decoupling, since we noticed that they pulled down the rail about 100mV every time they sampled (1 kHz).

Bump Switches

Bump switches are easy - all you have to do is pull up (or down) one side of the switch with an appropriately sized resistor (1-10K) and determine whether the voltage across the resistor is high or low. We sprinkled bump sensors on Universal Waste in places where we felt it was too difficult to determine if we were stuck. We placed one additional bump sensor at the top of the helix, so we could count how many balls we had collected. In order to implement the ball collection sensor, we had to use an external interrupt that would trigger whenever the switch contacted. A passive RC filter was used to debounce the ball collection switch.

Instead of using external resistors to pull up or down our switches, we used the internal pull-up resistors in the Arduino (roughly 20-50K on the ATmega2560), and decided to use the adjacent pin to pull down the other side of the switch so that we didn't even have to solder in any headers. This configuration was neat and effective.

Battery

We used two 3-cell 11.1V LiPo batteries. We found that two batteries were able to support one full day of testing, and dramatically reduced the size and weight of our robot.

Arduino functionality

Our Arduino code went through many iterations throughout our design and build process. It was written in a mixture of standard Arduino functions and Atmel AVR C macros. We tried to use low-level hardware peripherals such as timers whenever we could on the ATmega2560.

Communication between the eeePC and the Arduino operated at a very fast baud rate of 500k. We designed an extensible command protocol, the implementation of which made it simple to add and remove commands as we iterated our design. The first byte received indicated the command being sent. The Arduino used a lookup table to know how many bytes of data to expect for that command, then passed that data to the appropriate responder function, which handled the command. The final list of Arduino commands that we used on Universal Waste was as follows:

- 0x00: ack: sends a response if the Arduino is alive

- 0x01: set_drive: commands the drive motors to be driven at a specific voltage

- 0x02: send_analog: request analog sensor readings (IR and battery voltage)

- 0x03: button_pressed: request the state of the start switch

- 0x04: get_bump: request the state of the bump sensors

- 0x05: set_motor: set the state of the auxiliary motors

- 0x06: get_ball_cnt: get the number of balls obtained since the last time this command was called

- 0x07: blink_led: cause the "ready" LED on Universal Waste to start blinking

- 0x08: reset_qik: reset the qik controller
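The opcode-then-payload scheme described above can be sketched in a few lines. This is only an illustration, written in Python rather than the Arduino C the robot actually ran. The opcodes come from the list above, but the payload lengths in `PAYLOAD_LEN`, the `CommandParser` class, and the way unknown opcodes are dropped are all assumptions made for the example.

```python
# Opcodes are taken from the command list; the payload lengths are
# assumptions for illustration, not the team's real values.
PAYLOAD_LEN = {
    0x00: 0,  # ack
    0x01: 2,  # set_drive (assumed: one byte per drive motor)
    0x02: 0,  # send_analog
    0x03: 0,  # button_pressed
    0x04: 0,  # get_bump
    0x05: 2,  # set_motor (assumed: motor index, on/off)
    0x06: 0,  # get_ball_cnt
    0x07: 0,  # blink_led
    0x08: 0,  # reset_qik
}


class CommandParser:
    """Collects bytes one at a time and calls a responder per complete command."""

    def __init__(self, responders):
        self.responders = responders  # maps opcode -> function(payload bytes)
        self.opcode = None            # None means "waiting for a command byte"
        self.payload = bytearray()

    def feed(self, byte):
        """Consume one byte; return the responder's result when a command completes."""
        if self.opcode is None:
            if byte not in PAYLOAD_LEN:
                return None  # unknown opcode: drop it (assumed recovery policy)
            self.opcode = byte
            self.payload = bytearray()
        else:
            self.payload.append(byte)
        if len(self.payload) == PAYLOAD_LEN[self.opcode]:
            opcode, payload = self.opcode, bytes(self.payload)
            self.opcode = None
            return self.responders[opcode](payload)
        return None
```

Adding a command in this layout means adding one entry to the length table and one responder, which matches the extensibility the protocol was designed for.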

Initially, we were using motors that had built-in encoders, so we designed the Arduino to do internal velocity feedback on the motors. This was done with a timed control loop and a very simple feedback structure that would increase motor output power if the period between encoder ticks was too long, and decrease it if the period was too short. This worked quite well, but due to mechanical power considerations, we ended up not using those motors. The final Arduino code contained no feedback, and ran the drive motors in open loop. Despite this, the robot was still able to drive very straight.
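A minimal sketch of that increment/decrement feedback rule, in Python for readability. The function name, the step size, the microsecond units, and the 0-127 power range are assumptions for the example and are not taken from the original Arduino code.

```python
def adjust_power(power, tick_period_us, target_period_us, step=1, max_power=127):
    """Run one pass of the control loop and return the new motor power.

    A tick period longer than the target means the wheel is too slow, so
    power goes up; a shorter period means it is too fast, so power goes down.
    """
    if tick_period_us > target_period_us:
        power += step
    elif tick_period_us < target_period_us:
        power -= step
    # Keep the command inside the (assumed) valid range of the motor driver.
    return max(0, min(max_power, power))
```

Called once per timer tick, this settles near the power at which the measured tick period matches the target, without needing any tuned gains.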

We also implemented motor slew rate limiting on the Arduino, to avoid sudden torques on the motors. When a motor drive command was issued with a large change in motor speed, the Arduino smoothed out that change over several hundred milliseconds, rather than sending it immediately. This was done using hardware timers on the Arduino.
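Slew rate limiting of this kind can be sketched as follows. The helper names and the step size are hypothetical; on the robot, the equivalent of `slew_step` would run from a hardware timer rather than in a Python loop.

```python
def slew_step(current, target, max_step):
    """Move `current` toward `target` by at most `max_step` per update."""
    if max_step <= 0:
        raise ValueError("max_step must be positive")
    delta = target - current
    if delta > max_step:
        delta = max_step
    elif delta < -max_step:
        delta = -max_step
    return current + delta


def ramp(current, target, max_step):
    """List the intermediate motor commands produced on the way to `target`."""
    outputs = []
    while current != target:
        current = slew_step(current, target, max_step)
        outputs.append(current)
    return outputs
```

For example, `ramp(0, 100, 30)` produces `[30, 60, 90, 100]`: the requested change is spread over four timer updates instead of being applied at once.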

We ran into many difficult-to-debug issues with the Arduino. One of the first problems that we found was that when certain auxiliary motors were on, we lost all communication with the Pololu qik motor driver. The problem was eventually tracked down to a short between the 12V ground and the Arduino ground on the shield; these grounds should be connected only internally on the qik. However, even after that fix, we still noticed occasional communication problems between the Arduino and the qik or between the Arduino and the eeePC. These were never fully diagnosed, but we did take many steps to try to solve them, some of which seemed to make the problem less frequent. The pins driving the MOSFETs controlling the auxiliary motors were initially directly adjacent to the pins connected to the USARTs on the ATmega2560. Switching this control (which could be contaminated with motor noise) to pins farther away from the USARTs seemed to help. We also had consistently inconsistent problems with the eeePC dropping its connection to the Arduino. It remains unknown whether this was a hardware or software bug. It is possible that our responder pointer structure for handling Arduino commands accidentally set the ATmega2560's program counter to a strange memory location. It is also possible that there were lingering issues with motor noise contaminating the USART lines or the Arduino's power lines. In an attempt to ensure that the Arduino's power lines weren't contaminated by the IR sensors, we installed bypass caps on the IRs, but to no avail. This problem did occur during the final competition, but luckily only twice.

Vision code

The key to our successful vision code was its simplicity. As computer vision problems go, Maslab is on the easier side -- field elements are colored specifically to help robots detect them. In particular, red and yellow are very distinctive colors and easy for a computer to identify.

Design

The 640-by-480 color image from our Kinect was downsampled to 160-by-120. In order to minimize noise, we downsampled using area averaging rather than nearest-neighbor interpolation. The 160-by-120 image was then converted to the HSV color space in order to facilitate processing using distinct hue and luminosity information.
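To make the two steps concrete, here is a pure-Python stand-in for area-averaged downsampling and HSV conversion. The team's code used OpenCV for both steps (see Implementation), so this is not their implementation; the function names and the nested-list image layout are assumptions. Going from 640-by-480 to 160-by-120 corresponds to `factor=4`.

```python
import colorsys


def downsample_area(image, factor):
    """Average each factor-by-factor block of RGB pixels into one pixel.

    `image` is a list of rows of (r, g, b) tuples. Rows and columns left
    over when the size is not a multiple of `factor` are dropped.
    """
    height, width = len(image), len(image[0])
    n = factor * factor
    out = []
    for by in range(0, height - factor + 1, factor):
        row = []
        for bx in range(0, width - factor + 1, factor):
            sums = [0, 0, 0]
            for y in range(by, by + factor):
                for x in range(bx, bx + factor):
                    for c in range(3):
                        sums[c] += image[y][x][c]
            row.append(tuple(s / n for s in sums))
        out.append(row)
    return out


def to_hsv(image):
    """Convert rows of 0-255 RGB tuples to HSV tuples with components in [0, 1]."""
    return [
        [colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0) for (r, g, b) in row]
        for row in image
    ]
```

Averaging a whole block means a single noisy pixel contributes only 1/16 of the output value at `factor=4`, whereas nearest-neighbor sampling would pass that pixel through unchanged whenever it happened to be the one sampled.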

Pixels in the HSV image were then classified as red, yellow, or other. We found that the best metric for color recognition was distance in HSV space (sum of squares of component errors) rather than hue thresholding.
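A sketch of classification by squared distance in HSV space. The reference colors and the `MAX_DIST` cutoff are made-up values (the team tuned theirs with a slider GUI), and wrapping the hue difference around the color wheel is an assumption about how the component error was computed.

```python
# Hypothetical reference colors as (h, s, v) with components in [0, 1].
REFERENCE = {
    "red": (0.0, 0.9, 0.8),
    "yellow": (0.15, 0.9, 0.9),
}
MAX_DIST = 0.1  # hypothetical cutoff on the squared distance


def hsv_distance(a, b):
    """Sum of squared component errors, with hue treated as circular."""
    dh = abs(a[0] - b[0])
    dh = min(dh, 1.0 - dh)  # hue 0.98 is close to hue 0.0 (assumption)
    ds = a[1] - b[1]
    dv = a[2] - b[2]
    return dh * dh + ds * ds + dv * dv


def classify(pixel):
    """Return the nearest reference color, or "other" if none is close enough."""
    best_label, best_dist = "other", MAX_DIST
    for label, ref in REFERENCE.items():
        d = hsv_distance(pixel, ref)
        if d < best_dist:
            best_label, best_dist = label, d
    return best_label
```

Unlike a hue threshold alone, this rejects pixels whose hue matches but whose saturation or brightness is far from the target, such as washed-out reflections.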

Flood fill was then used to identify all connected components of each color. For each connected component, we computed the average and variance of all x coordinates, the average and variance of all y coordinates, and the total number of pixels.
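The flood fill and per-blob statistics might look roughly like this. It is a plain-Python sketch rather than the team's Cython code; 4-connectivity, the explicit stack, and the dictionary layout of the results are assumptions.

```python
def blob_stats(xs, ys):
    """Mean and variance of the x and y coordinates, plus the pixel count."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    return {
        "size": n,
        "mean_x": mean_x,
        "mean_y": mean_y,
        "var_x": sum((x - mean_x) ** 2 for x in xs) / n,
        "var_y": sum((y - mean_y) ** 2 for y in ys) / n,
    }


def connected_components(labels, color):
    """Flood-fill every 4-connected region of `color` in a grid of labels."""
    height, width = len(labels), len(labels[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y0 in range(height):
        for x0 in range(width):
            if seen[y0][x0] or labels[y0][x0] != color:
                continue
            # Iterative flood fill from this seed pixel.
            stack = [(x0, y0)]
            seen[y0][x0] = True
            xs, ys = [], []
            while stack:
                x, y = stack.pop()
                xs.append(x)
                ys.append(y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and labels[ny][nx] == color):
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            blobs.append(blob_stats(xs, ys))
    return blobs
```

Picking a navigation target is then a one-liner such as `max(blobs, key=lambda b: b["size"])`, guarded by a check that the list is not empty.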

When navigating to a ball, we simply used the coordinates of the largest red blob in the frame. Similarly, when navigating to a wall, we simply used the coordinates of the largest yellow blob in the frame. We did not attempt to track changes in the location of objects from frame to frame -- we just used the largest object in each frame.

We used a simple proportional controller to drive to target objects using vision data. This was necessary because our drive motors used open-loop control.
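As an illustration of proportional steering from vision data, the sketch below turns a blob's x coordinate into left and right motor commands. The gain, base speed, speed range, and sign convention (positive is forward, a faster left wheel turns the robot right) are all assumptions; only the 160-pixel image width comes from the text.

```python
def clamp(value, low, high):
    """Limit `value` to the range [low, high]."""
    return max(low, min(high, value))


def drive_command(blob_x, image_width=160, base_speed=60, gain=0.5, max_speed=127):
    """Return (left, right) motor commands that steer toward the blob.

    The error is the blob's horizontal offset from the image center, in
    pixels; the turning effort is simply proportional to it.
    """
    error = blob_x - (image_width - 1) / 2.0
    turn = gain * error
    left = clamp(base_speed + turn, -max_speed, max_speed)
    right = clamp(base_speed - turn, -max_speed, max_speed)
    return (int(round(left)), int(round(right)))
```

Because the command is recomputed from every new frame, any drift in the open-loop drive shows up as a pixel error and is corrected on the next frame.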

Implementation

The bulk of our vision code was written in pure Python, with the most CPU-intensive parts compiled using Cython. We had fewer than 200 lines of vision code overall. The image resizing and color space conversion used OpenCV, but all other code (including flood fill) was custom.

We wrote a GUI app with sliders to set color detection constants that displayed an image showing objects that had been recognized. We anticipated adjusting these constants before every match -- however, our color detection proved robust enough that we did not adjust color parameters at all after the second week.

We also wrote a speed tester that automatically profiled the code to help us find and eliminate bottlenecks.

Performance

The first iteration of our vision code ran at 10 frames per second. Using the Python profiler to determine which statements were bottlenecks, we increased it to 100 frames per second by the second week. Since the camera only provided 30 frames per second, we had plenty of time between frames to do other processing. This eliminated the need to use multiple threads of execution.

The most expensive part of our vision code was downsampling the 0.3-megapixel image (640-by-480) to about 0.02 megapixels (160-by-120). After that, everything else was very quick.

From a programming standpoint, it was very convenient to do everything in a single thread: get image, process image, update state machine, send new drive commands, and repeat.

Effectiveness

Our vision code was extremely effective at detecting red and yellow objects. We had very few false negatives and no false positives. Green detection was easy as well, but we got rid of it since we didn't use green object information at all. It was difficult to distinguish the carpet, blue tops of walls, and white walls.

Rather than implementing blue line filtering, we mounted our camera so that the top of its field of view was perfectly horizontal and 5 inches above the floor. It was physically unable to see over walls. In this configuration, it was not necessary to identify blue or white.

We were one of the teams using a Kinect for vision. We did not end up using the depth data at all, since it was only reliable for objects two feet or more from the robot. However, we still feel that it was advantageous to use the Kinect, since its regular camera (designed for video games) had better color and white balance properties than the cheap Logitech webcam.

Navigation code

The basic idea of our navigation code is very simple: If the robot sees a red ball, it should try to possess it. If the robot sees no red balls, it should find a wall and follow it until another red ball appears in sight. Near the end of the game, find the yellow wall and approach it. Then, just before the 180 seconds are up, dump all possessed balls and then turn off all motors.

We originally started with closed loop control, so the code would issue commands such as "Drive 22 inches to reach the ball." However, we had issues with this at the Arduino level and eventually had to switch to open loop control. With open loop control, our commands look more like "The ball is far in front of us, so drive forward quickly."

We figured the best model for navigation would be to send a command to the motors every time we process a new frame from the Kinect. To accomplish this, we wrote a state machine that combines the current state of the robot with sensory inputs to obtain an action (such as drive commands to the motors) and a state transition.

However, from a given state, there should be multiple valid transitions. For example, pretend the robot has a ball in sight and is driving towards it. The typical transition edge should be to stay in the "drive to ball" state, but if a front bump sensor is triggered, the robot is probably stuck on a pillar so it should instead transition to a state that tries to free the robot by backing up.

So, before transitioning states, we first check if the robot is stuck. If it's not, we then (if the time is right) check to see if the yellow wall is in sight. Continuing on, we next check if the robot can see any red balls. And then, if none of those apply, the robot takes the default transition edge. This approach seemed risky because it means that seeing red for just a single frame can dramatically alter the state of the robot, but our vision code was robust enough that this proved to be a non-issue.

What was an issue, though, is that there are still other ways to get stuck in a state. If for some reason the robot catches on a pillar without triggering an IR or bump sensor, it could stay in the "drive to ball" state indefinitely! To prevent this, we set a timeout for each state.
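The transition priorities and per-state timeouts described above can be sketched as follows. The state names are ours, but the `Navigator` class, the sensor dictionary keys, the timeout values, the states a timeout leads to, and the choice to check the timeout right after the stuck check are assumptions made for the example.

```python
# Hypothetical timeouts: state -> (seconds allowed, state entered on timeout).
TIMEOUTS = {
    "LookAround": (3.0, "GoToWall"),
    "GoToBall": (8.0, "Unstick"),
}


class Navigator:
    """Chooses one state per camera frame using a fixed priority order."""

    def __init__(self, state="LookAround"):
        self.state = state
        self.entered_at = 0.0

    def _choose(self, sensors, elapsed, seek_yellow):
        if sensors.get("stuck"):
            return "Unstick"                       # 1. stuck overrides everything
        limit = TIMEOUTS.get(self.state)
        if limit and elapsed > limit[0]:
            return limit[1]                        # 2. state has run too long
        if seek_yellow and sensors.get("yellow_visible"):
            return "GoToYellow"                    # 3. yellow wall, if the time is right
        if sensors.get("ball_visible"):
            return "GoToBall"                      # 4. red ball in sight
        return self.state                          # 5. default edge: stay put

    def step(self, sensors, now, seek_yellow=False):
        """Process one frame and return the (possibly new) state name."""
        nxt = self._choose(sensors, now - self.entered_at, seek_yellow)
        if nxt != self.state:
            self.state, self.entered_at = nxt, now  # restart the state timer
        return self.state
```

Note that the timer restarts only when the state actually changes, so a robot that keeps seeing a ball it cannot reach will still hit the GoToBall timeout.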

States

Here's a high-level overview of the states from our state machine, with descriptions of all default transition edges plus a few other important edges.

- LookAround – Rotate the robot in place, looking for (if applicable) red balls or yellow walls. After a couple of seconds (which we timed to be just longer than a full revolution), enter GoToWall.

- GoToWall – Drive until the robot hits a wall, and then enter FollowWall.

- FollowWall – Using a PDD controller, maintain a constant distance between the right side of the robot and the wall (as measured by an IR sensor). Periodically enter LookAway to look for red balls or yellow walls. (It's worth noting that we made our code very flexible so we could, for instance, override stick detection in FollowWall mode to ignore the right bump sensor; without this modification, we ended up accidentally triggering stick detection at every inside corner.)

- LookAway – Turn away from the wall by half a revolution (as determined by IR) and then turn back. If applicable, look for red balls or yellow walls while turning away. Then return to FollowWall.

- GoToBall – Using a P controller to keep the ball centered, drive towards a ball. When the ball is sufficiently high in the image (meaning the robot is close to it), enter SnarfBall.

- SnarfBall – Drive forward (with the front roller on) in an attempt to pick up a ball.

- DriveBlind – The idea behind this state is that we don't want to enter LookAround if we lose a ball for a single frame. When the robot is in GoToBall and loses the ball, it will enter DriveBlind and keep driving for a couple of frames before giving up.

- GoToYellow – Using a P controller to keep the yellow wall centered, drive towards it. When the wall is close enough (measured by both front IRs reading a small distance, and the yellow blob in the camera being sufficiently large), enter DumpBalls.

- DumpBalls – This is a slight misnomer because it doesn't actually dump. Instead, this state either proceeds to ConfirmLinedUp (if the robot isn't happy about where the yellow is) or WaitInSilence (if the robot is).

- ConfirmLinedUp – Back up a bit, then try GoToYellow again.

- WaitInSilence – Turn off everything (mainly to save battery power, but it has the side effect of suspensefully appearing as if our robot has died) and wait until 3 seconds before endgame, then HappyDance.

- HappyDance – Turn on the roller on the third level, and rapidly shake side-to-side to eject all the balls.

- Unstick – Figure out which IR or bump sensor indicates that the robot is stuck, and then move in the opposite direction (plus some random angle offset) in an attempt to unstick.

- HerpDerp – Drive backwards in a random S-shaped curve.

Other details

To explain language like "If applicable, look for red balls or yellow walls," we need to dip a little into our robot's strategy. At the beginning, the robot should look for red balls and should ignore yellow walls. When there are 100 seconds to go, a flag is raised and the robot starts looking for yellow walls. If it sees a yellow wall, it reacts by "stalking" the wall. This means that the robot will still go for balls, but it will not follow walls out of fear of losing the yellow wall. (If however going for balls accidentally causes the robot to lose sight of yellow, it will temporarily ignore red balls and re-enable wall following until it finds yellow again.) Then, when there are only 30 seconds to go, the robot stops looking for red balls and focuses exclusively on approaching the yellow wall.
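One way to express these time-based rules is a function that maps the time remaining and the yellow-wall sighting to a set of behavior flags. The 100-second and 30-second marks come from the text; the function name, the flag names, and the decision to leave wall following enabled in the last 30 seconds are assumptions.

```python
def strategy(seconds_left, yellow_visible, was_stalking):
    """Return behavior flags for the current frame.

    `was_stalking` is the "stalking" flag returned for the previous frame,
    i.e. whether yellow has been seen since the 100-second mark.
    """
    def flags(seek_yellow, chase_balls, follow_walls, stalking):
        return {
            "seek_yellow": seek_yellow,
            "chase_balls": chase_balls,
            "follow_walls": follow_walls,
            "stalking": stalking,
        }

    if seconds_left > 100:
        return flags(False, True, True, False)    # early game: balls only
    if seconds_left <= 30:
        return flags(True, False, True, was_stalking)  # endgame: yellow only
    stalking = was_stalking or yellow_visible
    if not stalking:
        return flags(True, True, True, False)     # yellow not found yet
    if yellow_visible:
        return flags(True, True, False, True)     # stalk: balls yes, walls no
    return flags(True, False, True, True)         # lost yellow: go find it again
```

The caller feeds the returned `stalking` value back in on the next frame, which is what lets the robot remember that it has already found the yellow wall once.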

The theory behind the yellow stalking is that, while 30 seconds should be enough to find yellow no matter where the robot is, it's just a good idea not to lose sight of yellow just in case the robot gets stuck elsewhere on the field. We'd be happier dumping the balls already collected than spending more time picking up a few more. In practice, this also worked to our advantage because it meant that we were in a prime position to scoop up and return balls dumped by our opponent near the yellow wall. (We were the only team with the strategy of holding onto balls until the very end.)

One failure mode we noticed in our design was that, if a ball wedged itself into an inaccessible corner, we might spend arbitrarily long trying to snarf it. A timeout in a single state would not help because, in this example, the robot continually transitions between Unstick and GoToBall. To prevent this, we incremented a counter each time we entered Unstick and would temporarily ignore balls when this counter exceeded a given threshold. (Successfully snarfing a ball, as measured by the sensor on our third level, would reset the counter.)
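The counter described above is small enough to sketch directly. The class name, method names, and default threshold are hypothetical, and the sketch does not model how the "temporarily ignore balls" period ends, since the text does not say.

```python
class UnstickCounter:
    """Tracks repeated Unstick entries to detect an unreachable ball."""

    def __init__(self, threshold=3):
        self.threshold = threshold  # hypothetical value
        self.count = 0

    def entered_unstick(self):
        """Call each time the state machine enters Unstick."""
        self.count += 1

    def ball_collected(self):
        """Call when the ball sensor reports a successful snarf."""
        self.count = 0

    def ignore_balls(self):
        """True once Unstick has been entered more often than the threshold."""
        return self.count > self.threshold
```

The state machine would consult `ignore_balls()` before taking the "red ball in sight" transition, so a ball wedged in a corner stops pulling the robot back after a few failed attempts.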

That's about the entirety of our code, in a nutshell. There are a few other minor details (for example, we disabled our helix if we detected that the third level was full, and we pulsed the helix every couple of seconds to keep it from jamming), but those were mainly specific to the robot we built. In terms of applying a strategy to robots in general, the state machine architecture described above is probably more helpful.