Team Six/Final Paper

Our code and designs can be found at http://code.google.com/p/maslab-team-6.

Overview

Yo dawg, I heard you liked state machines...

At the beginning of our four weeks, we had high goals. We wanted to build a sturdy robot which would collect balls and shoot over the walls. Over the course of MASLAB, we realized that some of our expectations were slightly too high. We didn’t finish our mechanical robot until late enough that we did not get to test all of our software. This was problematic for all of the mock competitions (though, by running and testing multiple times, we were able each time to make the robot have most of our intended functionality) and for the final competition. Our best showing was at seeding where we gave ourselves the time to debug our software after temporarily ceasing work on mechanics.

Initially, our goal was to start by collecting the red balls and then, either when the robot was full or 30 seconds before the end of the match, whichever came first, to start scoring. In terms of our high-level behavioral programming, we intended to have a system of state machines within state machines. At the upper level, there would be three main states: Collect, Score, and Escape. In Collect state, the robot would focus on gathering red balls, with an internal state machine to determine what methods to use (wander, wall following, tracking a particular ball, etc). In the Score state, the robot would focus on finding the yellow wall and then shooting the balls over it. The robot would enter Escape state if it became stuck. However, due to time constraints, not all of this overall strategy wound up implemented.

Mechanical Design

A lesson in repetition.

Our design centered around a large Ferris wheel, which was supposed to roll the balls (collected via a hyperboloid rubber band roller) up to the top of the bot, where a shooter would toss them over the wall. Our shooter was supposed to consist of two wheels spinning in opposite directions, and would ideally have sent all the collected balls into the 5-point zone on the other side of the field. After assembling the shooter, we quickly discovered that even a drive motor did not have enough torque to run the shooter at a gear ratio that would give the red balls an adequate escape velocity, so we fell back on a design which was supposed to use the Ferris wheel to simply boost the balls onto a track that dropped them over the wall.

Our problems with the shooter were not exactly unprecedented or unexpected. Team 6's mechanical and electrical design was somewhat complicated by our lack of experience. No one on our team intends to be Course 2, and none of us has taken any mechanical engineering classes. However, Bianca had previous experience with FIRST and outside projects, Will had done some woodworking and electronics projects in high school, and Tej had some CNC and digital electronics experience from high school.

Fortunately, CAD makes designing (at the very least plausible) mechanical structures very easy, and laser cutting and water jetting take a lot of pressure off of the machining side of things. While we did have to modify several pieces, and make several others by hand, almost all of our pieces were laser cut. CAD and laser cutting also allowed for revisions to be made to individual parts and systems very nicely. For instance, we tended to get parts cut first, then revise them in CAD as we discovered flaws such as slightly misplaced mounting holes or interfaces we hadn't properly thought out yet. Once we were satisfied that we'd revised all the known flaws in the design, we'd get a final part cut to replace the draft part, which often had seen a lot of wear (and drilling).

The high accuracy of the laser cutter also allowed us to use a system of tabs and mounting holes to tie parts together, rather than having to rely on L-brackets or tapped holes to position them. This afforded us a great degree of accuracy and made assembly a very easy task. (It's worth noting that we purchased about $10 worth of 6-32 x 1" hardware from the Home Depot to assist us in assembly, as certain parts in MASLAB's official 6-32 hardware selection were somewhat sparse from day to day.)

Despite the advantages afforded to us by CAD, however, we made some relatively major mistakes that future teams would do well not to repeat. First, we left the mounting and placement of electronics and other minor parts until very late in the mechanical design, which forced us to mount the electronics and one belt in such a way that our collision boundary was no longer circular. This turned out to be more important than we had anticipated. Second, we failed to maintain good modularity as our mechanical design became more complex. Our wheels, for instance, were mounted beneath two stages added for electronics. On the night of impounding, the set screws of the right wheel loosened, causing it not to spin properly. It proved somewhat difficult to remove and repair the wheel, and took far more time than would have been ideal, given our already tight time constraints. Additionally, on the day of the competition, we noticed that one of our wheels was rubbing on the side of the laser cut wheel space.

Given some previous problems with the drive motors, and their extreme importance to the robot's proper function, we would have been wise to make sure that we could immediately get to the wheel and motor. Perhaps the easiest way to do this would have been to ensure that the entire left and right wheel complexes could be detached and removed by loosening only a few accessible screws. Having our design be more modular would also have allowed us to make spare modules for such important parts as the wheels or front roller.

Overall, however, we determined that laser cut pieces were super useful. Having mechanics up and working earlier would have been even more useful: it would have helped the programmers to have a robot to test on during the build season. One way to deal with this would have been to leave them a working robot base, either our pegbot or one of our earlier mockups. This would have required using extra motors, sensors, etc.

In terms of electronics, we didn’t have any major issues. We blew two motor controllers when we accidentally plugged in motor power in the wrong location on the boards, and we had some transient problems with the Arduino just before impounding, but this was likely due to metal shavings falling on the board. The only other electrical issue came up when sending signals to our stepper driver: we realized that we hadn’t configured our output pin as an output, so it had been left in its default high-impedance input mode. In hindsight, the issues we had running two motor controllers at once (sometimes, they randomly crashed) could have been caused by this same output pin issue.

Programming

More code than your robot has room for.

The biggest guiding principle in our programming plan was modularity. Modularity is important in general, but even more so in a group project, because it means that work can be divided. We came up with the concept of modules that can be stepped with some input, and which run in their own processes. For lack of a better term we called this type of module a “blargh”. These blarghs could then be hooked together via pipes to create a flow of information. The multiprocessing was particularly useful for keeping the vision module separate from the rest of the code: vision was very processor intensive, and running it in its own process allowed it to make use of multiple cores. We also focused on keeping our code clean and commented so that our other two team members could jump into the code at any time and make changes.
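A minimal sketch of the blargh idea, assuming Python's standard `multiprocessing` module. The class and function names (`Blargh`, `DoublerBlargh`, `run_blargh`, `spawn`, `demo`) and the `None` shutdown sentinel are made up for illustration and are not taken from the team's repository.

```python
import multiprocessing as mp


class Blargh:
    """A module that turns one input message into one output message."""

    def step(self, inp):
        raise NotImplementedError


class DoublerBlargh(Blargh):
    """Hypothetical stand-in for a real module such as vision or control."""

    def step(self, inp):
        return inp * 2


def run_blargh(blargh, in_conn, out_conn):
    """Process body: step the blargh on every message until None arrives."""
    while True:
        msg = in_conn.recv()
        if msg is None:
            # Sentinel (an assumption of this sketch): pass it on and stop.
            out_conn.send(None)
            break
        out_conn.send(blargh.step(msg))


def spawn(blargh, in_conn):
    """Start a blargh in its own process; return (process, output pipe end)."""
    out_recv, out_send = mp.Pipe(duplex=False)
    proc = mp.Process(target=run_blargh, args=(blargh, in_conn, out_send))
    proc.start()
    return proc, out_recv


def demo():
    """Chain two blarghs with pipes and push a few values through them."""
    src_recv, src_send = mp.Pipe(duplex=False)
    first, middle = spawn(DoublerBlargh(), src_recv)
    second, sink = spawn(DoublerBlargh(), middle)
    results = []
    for value in (1, 2, 3):
        src_send.send(value)
        results.append(sink.recv())
    src_send.send(None)
    first.join()
    second.join()
    return results
```

Calling `demo()` from a script (under an `if __name__ == "__main__":` guard) should return `[4, 8, 12]`, since each value passes through two doublers running in separate processes.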

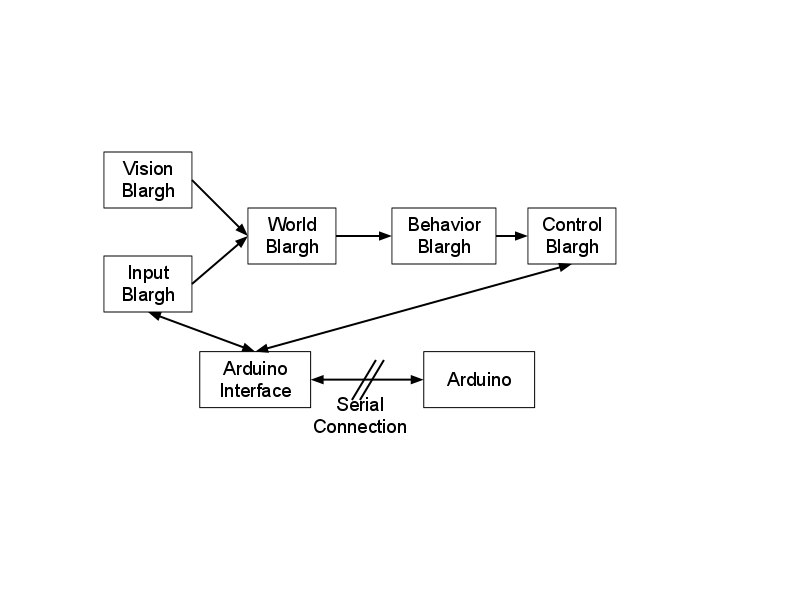

The diagram above shows the structure of the blargh modules and their linkages.

The input comes in through the InputBlargh and the VisionBlargh. The outputs from these go into the WorldBlargh, which aggregates the data and creates an object that represents the state of the world. This is then passed to BehaviorBlargh, which uses it to update the state and generate some output. The output then goes to the ControlBlargh, which applies PID control to move to the goal location.

The interaction with the Arduino was a little bit special; the Arduino code ran in its own process but wasn’t a blargh. To handle this, we implemented an interface which kept track of a variable number of pipes and waited for input. The input it received told it what to do: poll a sensor, or send a command to an actuator. We also ended up rewriting the code that interfaced with the Arduino, because there were speed issues. When creating the blarghs, we passed to the constructor a pipe to the Arduino wrapped in a class for interfacing. In the above diagram, you can see the Arduino interface connected by lines to the blarghs that made use of it (InputBlargh and ControlBlargh).
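One way an interface like this could wait on a variable number of pipes is with `multiprocessing.connection.wait`. The sketch below is an assumption about the general shape, not the team's code: `FakeArduino`, `service_once`, the `(kind, name, value)` message format, and the sensor and actuator names are all hypothetical.

```python
from multiprocessing import Pipe
from multiprocessing.connection import wait


class FakeArduino:
    """Hypothetical stand-in for the serial link to the real board."""

    def __init__(self):
        self.sensors = {"ir_front": 42}
        self.actuators = {}

    def poll(self, name):
        return self.sensors[name]

    def command(self, name, value):
        self.actuators[name] = value


def service_once(arduino, conns, timeout=None):
    """Handle one pending request on every pipe that is ready to read."""
    handled = 0
    for conn in wait(conns, timeout):
        kind, name, value = conn.recv()
        if kind == "poll":
            # Sensor reads are answered on the same pipe they arrived on.
            conn.send(arduino.poll(name))
        elif kind == "command":
            arduino.command(name, value)
        handled += 1
    return handled
```

Each blargh would hold the client end of its own pipe, so adding another client only means appending one more connection to `conns`.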

For our BehaviorBlargh, we decided that a state machine was the best way to go. We adopted Will’s method of having the states be separate classes, with each state defining a step method that returns a new state and an output. This once again capitalized on the idea of modularity, so that we could look at each state by itself and not worry about how the other states worked. The state machine definition itself was also simplified this way, because all it had to do was create the initial state object and then, whenever it was stepped, step whichever state object was current. One disadvantage of this was that we couldn’t figure out how to divide the classes into multiple files in such a way as to avoid circular imports, and thus ended up making all of the state classes top-level and in one file. This meant that looking through and finding the correct class took some time. In hindsight, we should have devoted a little bit more time to figuring out a good way to split the files, because it would have saved us much more time in the long run.
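The states-as-classes pattern can be sketched roughly as follows. The state names come from the strategy described in this paper, but the `world` dictionary, its keys, the output strings, and the capacity of 3 balls are assumptions made for illustration.

```python
class State:
    def step(self, world):
        """Return (next_state, output) for one tick."""
        raise NotImplementedError


class CollectState(State):
    def step(self, world):
        # Switch to scoring when full (capacity is assumed) or 30 s from the end.
        if world["balls_held"] >= 3 or world["time_left"] <= 30:
            return ScoreState(), "stop_roller"
        return self, "chase_ball"


class ScoreState(State):
    def step(self, world):
        if world["balls_held"] == 0:
            return CollectState(), "start_roller"
        return self, "dump_balls"


class StateMachine:
    """Holds the current state object and delegates every step to it."""

    def __init__(self, initial):
        self.state = initial

    def step(self, world):
        self.state, output = self.state.step(world)
        return output
```

If states like these were split across files, importing the target state inside `step` rather than at module top level is one common way to sidestep circular imports.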

As with everything, our behavior strategy was focused on modularity. We originally intended to have state machines within state machines, with the top-level state machine very simply broken up into three states: “Collect”, “Score”, and “Escape”. Below these, we broke our state machines up into various smaller states and/or states that were themselves state machines. For example, we broke down “Collect” into “FindBallState” and “BallAcquisitionState”, which were themselves broken down into states.

We tried to design our behavior so that our robot would never stay stuck: stuck detection would trigger a transition into EscapeState, and timeouts would act as a fallback in case it got stuck anyway. Unfortunately, due to lack of testing at the very end, our code had a messed up timeout in one of the states, which, combined with our bump sensor falling off, led to our robot getting stuck in the finals.
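A rough sketch of how stuck detection and a per-state timeout can both lead into an escape state, so that a timer still fires if a sensor fails. `TimedState`, the `run` hook, the `"stuck"` key, the timeout values, and the injectable `clock` are all assumptions of this sketch, not the team's implementation.

```python
import time


class TimedState:
    """Hypothetical base class: subclasses implement run(world)."""

    timeout = 5.0  # seconds; assumed value

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self.started = clock()

    def expired(self):
        return self.clock() - self.started > self.timeout

    def step(self, world):
        # Either trigger is enough: a stuck signal or the state's own timer.
        if world.get("stuck") or self.expired():
            return EscapeState(self.clock), "reverse"
        return self.run(world)

    def run(self, world):
        raise NotImplementedError


class FindBallState(TimedState):
    def run(self, world):
        return self, "wander"


class EscapeState(TimedState):
    timeout = 2.0  # seconds; assumed value

    def step(self, world):
        # Escape ends on its own timer rather than on any sensor reading.
        if self.expired():
            return FindBallState(self.clock), "wander"
        return self, "reverse"
```

Passing the clock in as a parameter makes the timeouts easy to exercise in a test without waiting in real time.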

Vision

“Does it work?”

“Yes”

“Have you tested it?”

“Why would I do that?”

Our vision code for the robot was written purely in C++ and integrated with Python via ctypes. Ctypes is a foreign function library for Python that is used to manipulate C data types and call C functions in shared libraries or DLLs. We wrote the code in C++ for two reasons: speed and documentation. C++ is compiled while Python is interpreted, so pixel-level loops written in C++ often run far faster than the corresponding Python code. The multi-language library we were using for vision, OpenCV, also had much worse documentation for Python than it did for C++. Occasionally the Python documentation was incorrect (in one case it was even written for the C++ side), and information was often missing.
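As a small illustration of the ctypes mechanism, the sketch below calls `strlen` from the C runtime on a POSIX system. The C runtime is only a stand-in for a compiled vision library (whose name and functions are not given here), and `c_strlen` is a hypothetical wrapper name. A C++ library would typically expose its entry points with `extern "C"` linkage so that ctypes can find them by name.

```python
import ctypes
import ctypes.util

# Load the C runtime as a stand-in for a compiled vision library (POSIX only).
libc = ctypes.CDLL(ctypes.util.find_library("c"))

# Declare the signature so ctypes converts arguments and results correctly.
libc.strlen.argtypes = [ctypes.c_char_p]
libc.strlen.restype = ctypes.c_size_t


def c_strlen(data: bytes) -> int:
    """Call the C function and return its result as a Python int."""
    return libc.strlen(data)
```

The same three steps (load the shared library, declare `argtypes` and `restype`, call the function) would apply to a vision function that fills in a buffer of detected balls.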

To identify balls, the vision code took the RGB data that the camera fed to us and converted it to HSV. HSV is preferred when filtering an image for vision processing because the hue and saturation values are in theory unaffected by lighting conditions, assuming white light. OpenCV provides a function, cvtColor(...), that will take an RGB image and convert it to an HSV image, but during testing we found that this function was quite slow and was taking up the majority of our processing time. To speed up this section of the code, we pre-computed the HSV color for every possible RGB combination and stored the results in a file of 256*256*256*3 bytes (about 50 MB). When we ran our program on the eeePC, we loaded this array at the start and referenced it to do the conversion from RGB to HSV. This made the conversion significantly faster.
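The lookup-table idea can be sketched in plain Python as follows. To keep the example fast, this sketch keeps only 4 bits per channel (4096 entries) instead of the full 8 bits described above, and it scales hue, saturation, and value each to 0..255; both choices are assumptions of the sketch, as are the names `build_table` and `rgb_to_hsv_lut`.

```python
import colorsys

BITS = 4             # bits kept per channel; the full table keeps all 8
LEVELS = 1 << BITS   # quantised levels per channel
SHIFT = 8 - BITS


def build_table():
    """Precompute HSV bytes for every quantised RGB triple."""
    table = bytearray(LEVELS ** 3 * 3)
    i = 0
    for r in range(LEVELS):
        for g in range(LEVELS):
            for b in range(LEVELS):
                h, s, v = colorsys.rgb_to_hsv(
                    (r << SHIFT) / 255, (g << SHIFT) / 255, (b << SHIFT) / 255
                )
                table[i:i + 3] = bytes(
                    (round(h * 255), round(s * 255), round(v * 255))
                )
                i += 3
    return table


TABLE = build_table()


def rgb_to_hsv_lut(r, g, b):
    """Convert 8-bit RGB to (h, s, v) bytes with a single table lookup."""
    i = (((r >> SHIFT) * LEVELS + (g >> SHIFT)) * LEVELS + (b >> SHIFT)) * 3
    return TABLE[i], TABLE[i + 1], TABLE[i + 2]
```

The per-pixel cost becomes a few shifts and one index computation, with all of the floating-point work paid once when the table is built.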

In the final version, we detected the balls by filtering on a range of hue and saturation for red. This method worked very well, and we were able to identify red balls nearly all of the time if they were within a reasonable distance. Another technique that we found to work very well, but dropped for the sake of speed, was normalizing value in the HSV image, converting back to RGB, and filtering on the amount of red. We also experimented with various methods of smoothing and averaging over multiple frames to decrease noise, but with better HSV conversion and downscaling we found that this was not necessary.
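A hue and saturation filter for red might look like the sketch below. The thresholds (`hue_band`, `min_sat`) and the 0..255 hue scale are assumptions chosen for illustration, not the values used on the robot. Hue is circular, so red sits on both sides of zero and the test has to wrap around.

```python
def is_red(h, s, hue_band=10, min_sat=120):
    """True when a byte-scaled (hue, saturation) pair looks red."""
    # Distance around the hue circle from pure red at 0 (and 256).
    distance_from_red = min(h, 256 - h)
    return distance_from_red <= hue_band and s >= min_sat


def red_mask(hsv_rows):
    """Map rows of (h, s, v) pixels to rows of booleans; value is ignored."""
    return [[is_red(h, s) for (h, s, _v) in row] for row in hsv_rows]
```

Ignoring the value channel is what gives the filter its tolerance to brightness changes.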

The last piece in finding the balls was to do an ellipse fit on the red blobs that we found. If there were balls that were right next to each other, we treated them as one ball, and just used the smaller radius of the ellipse for estimating a circle. This worked because we only needed to center our robot on the balls: we didn’t care about their exact distance or location, just an approximate location.
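Reducing a fitted ellipse to an approximate circle and a steering offset could be as simple as the sketch below. It assumes the ellipse arrives as `((cx, cy), (axis_a, axis_b), angle)` with full axis lengths, which is the layout OpenCV's `fitEllipse` uses; the function name and the idea of returning a horizontal offset are assumptions of the sketch.

```python
def ball_from_ellipse(ellipse, image_width):
    """Return (horizontal offset from image centre, approximate radius)."""
    (cx, _cy), (axis_a, axis_b), _angle = ellipse
    # The shorter axis is closest to a single ball's diameter when
    # neighbouring balls have merged into one elongated blob.
    radius = min(axis_a, axis_b) / 2.0
    offset = cx - image_width / 2.0
    return offset, radius
```

A positive offset means the blob is to the right of the image centre, so turning until the offset is near zero centers the robot on the ball.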

Lastly, to increase processing speed, we halved the resolution of the images that we received. The disadvantage of this was that we decreased the distance at which we could identify balls, but since our camera was tilted at a downward angle, this was not too big of a concern.

Code was also written to identify walls by filtering for the blue tape on top of all walls. This data was used to discard everything in the image above the wall, since we didn’t want to identify “balls” outside of the play area. The filtering worked similarly to how we found red balls, except that we did not fit ellipses to the filtered image. We also had code to identify yellow walls by filtering for yellow, but this was not used in the final version due to time constraints.
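Discarding detections above the wall line can be sketched per image column as follows. The masks are plain lists of booleans with row 0 at the top of the image; the function name and the choice to leave a column untouched when no tape is visible in it are assumptions of this sketch.

```python
def mask_above_wall(ball_mask, wall_mask):
    """Clear ball pixels that lie above the highest tape pixel in each column."""
    height = len(ball_mask)
    width = len(ball_mask[0]) if height else 0
    result = [row[:] for row in ball_mask]
    for x in range(width):
        # Row index of the first (highest) tape pixel in this column, if any.
        top = next((y for y in range(height) if wall_mask[y][x]), None)
        if top is None:
            continue  # no tape seen here; leave the column as it is
        for y in range(top):
            result[y][x] = False
    return result
```

Anything red that appears above the tape line in a column is then ignored before blobs are fitted.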

The reason our robot didn’t win was that all of our mechanical engineering sucked; the programming of this robot was actually godly and should be worshipped for all time.

/* Except for the part where the timeouts were commented out. Oh, and the robot couldn’t see the wall in front of it, despite the fact it was seeing nothing else. And all the python had semicolons peppered randomly around for readability. But otherwise, it was totally hardware's fault that the failure of a single bump sensor meant that the robot ground to a halt. #gracefuldegredationFTW*/ COMMENTED

Notice how software likes to comment out the most important parts. This also happened in competition. #Provingmypoint