Team Twelve/Final Paper

Despite our initial MechE ignorance, we Nomkeys made it through Maslab with a robot who scored in the final competition. Our success would have been impossible without the hard work of every one of our teammates:

- Roxana and Melody planned and built the robot's body. They gave themselves a crash course in design and machining: Melody CAD'ed all the parts of the robot's design, Roxana and Melody made numerous trips to Edgerton and CSAIL to build the brackets, screw, hopper, bearings, ramp, and other attachments, and they laser cut the three layers. Together they assembled and reassembled the robot many times.

- Holden gave the robot brains. He programmed and tested the state machine that tells the robot to explore, go after balls, and score.

- David gave the robot sight. He programmed the robot to recognize yummy round red and green things, the blue tape marking the boundaries of its world, and the yellow walls and purple pyramid.

And we got to know each other very well:

- Melody kept us all organized with her checklists and, by continuous questioning, made sure nothing would go wrong.

- Roxana saved us by staying sane and jumping to action when things were falling apart during pineapple time.

- Holden figured out the simplest and most efficient solution. Every time.

- David "entertained" us with his pessimistic "humor" and stayed cool while coding under pressure.


Overall Strategy

We split the 4-person team into 2 for coding and 2 for building. We talked and worked together often, so neither half developed sudden lofty expectations of what the other could or could not do.

Ball-Scoring

At first our goal was to score balls in the pyramid. We decided to collect as many balls as we could within a certain time period, rather than a certain number of balls. Since our robot was to be round and stable, building a tall scoring mechanism seemed feasible.

One interesting thing to note: balls thrown over the perimeter of the field did not count as negative points. It would be unfortunate, however, if we threw balls over some wall that was still on our side of the field. In the end, throwing the balls over any wall would have probably been advantageous.

Scoring the balls in any manner (rather than only possessing them, which was 5 points) would get at least 10 more points per ball. Therefore, the aim of the coding rush right before the competition was to get the balls over a yellow wall or over a purple wall. Our robot's helix and hopper were not tall enough to score anywhere else by the final few days. Had we made the robot taller, balancing it would have been an extra mechanical hassle.

Mechanical design and sensors (Roxana and Melody)

Ball Possession: Essential Components

Last year, the rubber band roller, though not common among the teams, showed quick and easy success. We made a completely functional one for our Peggy (pegbot), and won Mock 1 by being excellent at collecting balls. We used a kit motor with a crude but quick motor mount to secure the roller to Peggy's wooden walls.

- Rubber band roller - We CAD'ed the wheels for laser cutting out of acrylic. The staff had a helpful SolidWorks file that we altered slightly (making the ridges deeper so we could use thicker rubber bands). To keep pressure on the wheels and counteract the force of the rubber bands, we slid a keyed rod through the center holes and attached shaft collars with set screws to the rubber band wheels. Quick note of advice: everything attached to poles must be secured. We ended up keying the rod to make one side flat and then attaching parts with set screws. This is pretty easy to do at Edgerton.

- Ramp - A ramp along the bottom layer of our robot directed balls into the mouth of the helix. Pieces of cardboard on the ramp acted as walls. The ramp started at the bottom of the rubber band roller and angled upwards in a semicircular arc to the maximum height that balls could reach. It then slanted downwards slowly to give the ball enough momentum to end up in the perfect pick-up spot for the helix.

- Robot design - All of our robot prototypes had very low robot bases, only .75" off the ground or so. This way, we did not need the ramp to collect balls (note: not bring them to the hopper, just collect them), and this way we won Seeding. At some point, Hermes ate 6 balls and kept them in "herm" stomach. Goes to show: crude ball collection, paired with dependable code, can get you an easy win before the final competition.

Upwards Transport in the Robot: the Archimedes Screw

We understood that we needed an internal upwards-carrying mechanism in the robot to score. The lowest scoring point was 6", so we needed a mechanism to move a ball up at least that high.

- 1st idea: Pulley + Conveyor Belt - At first, we wanted to make a simple conveyor belt with sturdy metal parts which could figuratively "scrape" along the bottom of the ramp and draw up balls. We were quickly dissuaded by the staff, who told us that the winning design from last year was an Archimedes Screw.

- Final design: Archimedes Screw - We tried a lot of different wires for making the screw (given to us by kind people at Central Machine Shop; Roxana is good at making friends). We tried different widths of aluminum welding rod but they were too malleable, and we eventually settled on steel welding rod, recommended to us by someone at Edgerton. We had a lot of problems making the ramp and screw work together (having the ramp high enough so that the screw was low enough to pick up the balls). This was compounded by the fact that the helix's motor needed to be placed in the center of the helix and mounted at a certain height. This meant that we couldn't easily secure the helix, and at the beginning, the balls bent the helix repeatedly.

Robot Motion: Trying out Gears, Not using them

Don't use gears unless you know how to!! At first, we had a pretty simple yet sweet gear setup on our second acrylic prototype. Thanks to Mark and a beautiful lathe machine, we made a brass shaft to insert into the walls to let the middle gear spin freely. Gear count: a small bevel blue-threaded on a kit motor coupler, at 90 degrees with a freely spinning bevel on the wall, sort of randomly placed. This was in line with a white gear (provided) adapted with a set screw fit onto a .25" keyed shaft that also attached to the blue rubber wheels provided.

In the end, we dropped the gears because of stripping bevels, and the tiny bevel slowly falling off the motor shaft coupler. We remade our lower walls to accommodate large motors for high torque for the wheels. This caused "running into walls with happy abandon" to become a dangerous occurrence. Some advice: don't use gears unless you know the math behind them.

While scrounging for cotter pins in Pappalardo lab, we also found a caster that was a very good size for the back of the robot, although it kept the robot tilted forward slightly. We figured the center of mass of the robot was set by the 12V battery and the laptop battery, which would be placed somewhere along the back of the robot anyway, so we mounted the caster in the back.

In the end, we put our motors right next to our wheels, which cut a bit into our ball collecting ramp. However, we raised the ramp an inch above the motors and had a good centering mechanism to bring our balls in.

Ball Storage and Scoring

The helix transported the balls up to the top (4th) layer, which was made out of thin sheet metal and could store around 9 balls (though we never collected that many). We constructed the top layer out of material lighter than acrylic to minimize the weight of the entire robot. In the end, we were lucky and the robot was well-balanced, though it tended to tip forward slightly. Due to poor planning, we ended up constructing the entire 4th layer in the last two days before the final competition, but that ended up working well for us. For the scoring mechanism, we used a servo and a metal latch. The servo opened the metal latch, releasing the balls to go over the yellow wall or the lower level of the pyramid, depending on which one the robot found first. We also planned to add a ramp that could be lowered to score on the second level of the pyramid, but because the robot was too short, this was never implemented.

Sensors: On How many, Placement, and Redoing the EE way too many times

Our original pegbot had just a webcam for ball detection and all the necessary motions, winning us Mock 1.

Our first laser-cut prototype had two IR sensors facing the front, which only helped with moving away from walls. Our final prototype had one short-range and one long-range IR sensor in the front, plus two short-range IR sensors pointing left and right to help with "am I stuck?" detection and with finding the most open space for the robot to move towards.

Our wiring was pretty much a mess until about the last weekend before the final. Mounting and screwing things down as soon as the best possible prototype was done was an extremely good idea. We modularized the Arduino board, the motor controller board, the various 5V regulators, and the 5V power source. We kept them together with wires as short as possible, let wires touch a taped-down screw in a plastic layer, and shaped wires into 90-degree angles around the back and second layer of the robot.

Overall Robot Design and Process

LAYERS:

- BOTTOM LAYER - low robot body, close to the ground to make collection of the balls easier. This layer would have all the driving motors and ball collection mechanism

- SECOND LAYER - electronics layer.

- THIRD LAYER - computer layer

- TOP LAYER - ball storage

We redesigned our robot about 3-4 times and did not finalize our strategy or robot until the day before the competition, which was a big mistake. Future advice: try to finalize your design as early as possible. You'll have to make changes regardless, but at least you won't have to re-lasercut everything because of drastic changes in your design.

We CAD'ed and lasercut everything early in the second week, approximately a day before the second mock competition. (This was very rushed, as we had to quickly learn how to CAD, tentatively finalize our design, etc.). The design did not work because of the gears - though Roxana worked her hardest with Edgerton people to make the gears effective and efficient, we didn't know enough of the math behind it or have enough precision to make them functional. Thus, after making numerous holes in the walls for gears and then realizing that it was a lost cause, we redesigned and re-lasercut the entire robot.

This time, we included higher walls on the bottom layer to have an elevated ramp, included holes for securing, a larger base for the robot, and modified holes for the Archimedes screw. This became our final design. In between these two designs, we also managed to break the acrylic of the bottom layer. Panic! But someone else lasercut the part again.

Software design

All our code is available on the github repository [1]. We rely on the staff code for the arduino firmware. Here is an overview:

- main.py: starts the state machine.

- actions.py: defines the basic actions the robot can take, such as moving forwards, turning left, or releasing balls.

- states.py: defines all possible states the robot can be in. (See the next section for an explanation.)

- wrapper.py: a class that encompasses all current information the robot has. It handles communication with the Arduino, so it holds, for instance, all the sensor readings; it also logs the current time.

- VisionSystem.py: processes and filters images, finds the locations of targets, and passes them to wrapper.

- constants.py: defines constants such as motor speeds. Putting all the constants in a separate file was useful when we wanted to change/calibrate on the fly.

- mapper.py: outputs a local map given input from the camera. We did not use this in the final competition because we did not have time to get it working.

(The other files are various tests, and are unimportant.)

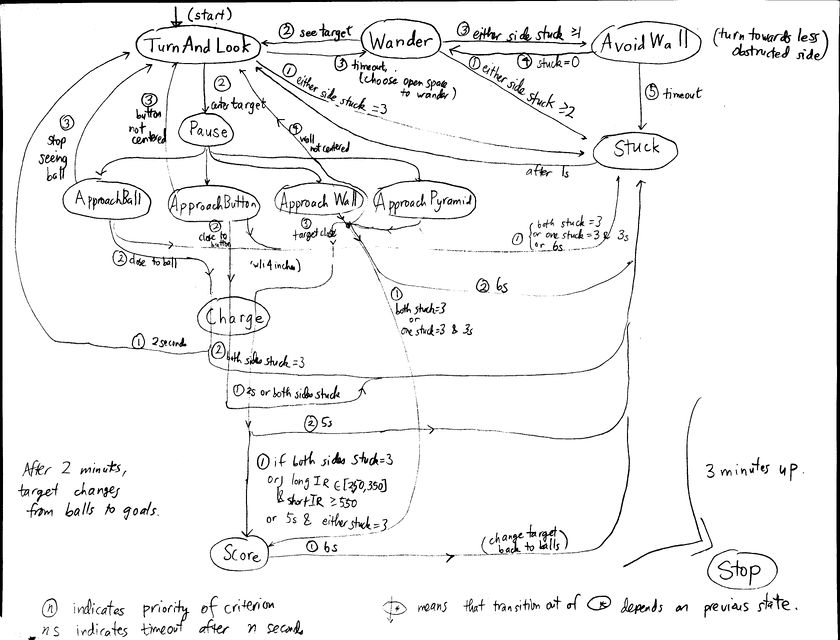

State Machine (Holden)

I decided to program Hermes's brain as a state machine. This means that at every instant in time (s)he has a well-defined state such as Wander, ApproachBall, or Score, and that she transitions to the next state based on his/her sensor readings (does (s)he see a ball? is the wall close?).

I used a state machine because

- it was simple, and

- it worked.

Though a state machine is conceptually simple, it has served many winning teams in the past. The easy part is creating the state machine architecture and defining the states. The hard part is determining the right transitions between the states: after what time should Hermes give up on a ball? Under what combination of sensor readings should Hermes realize that (s)he is stuck? To program the right transitions I had to go back and forth many times between testing and programming, because it is impossible to foresee all the circumstances the robot can be caught in.

Implementation Details

I defined a superclass called State. Each State has

- an Action associated with it,

- and a "stopfunction."

Furthermore, each State passed a Wrapper object to the next state.

The idea was that States represented an intention while Actions represented a simple command to the motors and servos. So for instance, the Wander state represents the robot going forward to explore an open space, and the associated action is simply GoForward, which turns both motors forward.

The stopfunction for a state, by examining the Wrapper object, returned the next state to transition to. For instance, if upon querying the wrapper it found both bump sensors to be pressed, it would return the "Stuck" state. The stopfunction returns 0 if the robot should not transition yet.

The run method in a State (e.g. Wander) would loop the Action corresponding to the state (e.g. GoForward), calling stopfunction in each loop to see if it should transition. If so, the state machine transitions to the next state.
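The State/Action/stopfunction arrangement described above could look roughly like the sketch below. It is not the team's code: the `Wrapper` fields, the `last_command` attribute, and the `max_loops` cap (added only so the example terminates) are assumptions made for illustration, and the real Actions would drive motors rather than record a string.

```python
class Wrapper:
    """Hypothetical stand-in for wrapper.py: holds the latest sensor readings."""

    def __init__(self, bump_left=False, bump_right=False):
        self.bump_left = bump_left
        self.bump_right = bump_right
        self.last_command = None


class GoForward:
    """Action: a simple motor command (recorded as text in this sketch)."""

    def run(self, wrapper):
        wrapper.last_command = "both motors forward"


class GoBack:
    def run(self, wrapper):
        wrapper.last_command = "both motors backward"


class State:
    """An intention: loops one Action until stopfunction names a successor."""

    action = None

    def __init__(self, wrapper):
        self.wrapper = wrapper

    def stopfunction(self):
        return 0  # 0 means "do not transition yet"

    def run(self, max_loops=1000):
        # max_loops is an assumption of this sketch so that it always returns.
        for _ in range(max_loops):
            self.action.run(self.wrapper)
            next_state = self.stopfunction()
            if next_state != 0:
                return next_state
        return 0


class Stuck(State):
    action = GoBack()


class Wander(State):
    action = GoForward()

    def stopfunction(self):
        # Both bump sensors pressed: hand the same Wrapper to the Stuck state.
        if self.wrapper.bump_left and self.wrapper.bump_right:
            return Stuck(self.wrapper)
        return 0
```

A driver would start from `Wander(wrapper)` and keep calling `run()` on whatever state comes back, so the same Wrapper object travels from state to state.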

States and Transitions

Our strategy was to

- collect balls in the first 2 minutes of the game, and

- find a yellow wall to score in the last minute.

If Hermes didn't see any balls, but saw the cyan button, she would go for that, and if he didn't find a yellow wall but time was short and she saw the purple pyramid, he would go for that. (Hermes would only go for the button again after 20 seconds.)

Hermes's basic Actions consisted of the following (which are self-explanatory)

- GoForward

- MaxForward

- GoBack

- TurnLeft

- TurnRight

- ForwardToTarget (e.g. ball, button, wall, or pyramid)

- DoNothing

- ReleaseBalls

Hermes's instincts are to

- turn around to look for balls and other targets,

- if she saw an appropriate target, he would go for it, and

- if she was stuck, back up.

It is not difficult to turn these into a list of States (associated actions in parentheses):

- Wander (GoForward)

- AvoidWall (TurnLeft or TurnRight) - try to swerve away from a wall

- TurnAndLook (TurnLeft or TurnRight)

- ApproachBall (ForwardToTarget)

- ApproachButton (ForwardToTarget)

- ApproachWall (ForwardToTarget)

- ApproachPyramid (ForwardToTarget)

- Stuck (GoBack)

- Charge (MaxForward) - charge towards a target

- Pause (DoNothing) - once she has a target centered, he pauses to allow the vision system to process the location of the target

- Stop (DoNothing) - automatically triggered after 3 minutes.

- Score (ReleaseBalls) - open the hopper door and close it several times, to shake the balls out

Finding the right transitions took trial and error. Each state has a list of transitions, with some taking higher priority than others. I'll describe a typical example below, and refer the reader to the complete diagram below for the rest of the states.

Stuck detection was essential. It would not do for Hermes to get stuck in any particular state, so I instituted a timeout for each state. For most states, Hermes would query wrapper.stuck() which would return (x,y) where 0<=x,y<=3, and x, y represent how stuck the left and right side are, respectively, from the readings of the 2 IR sensors and the bump sensor (bump sensor pressed representing maximum stuckness). If a side IR sensor sensed a wall within 4 inches, it added 1 stuckness point to a max of 3.

- Wander: In order of priority,

  - If stuckness is >=2 on either side, Stuck!

  - If Hermes sees a target, TurnAndLook to center it.

  - If stuckness is >=1 on either side, AvoidWall (swerve before she actually gets stuck).

  - If 5 seconds have passed, TurnAndLook

  - Else, keep wandering.
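As a rough illustration of the stuckness score and the Wander priority list above, here is a minimal sketch. The function names are hypothetical, and one detail is an assumption rather than something stated above: the score is reset to 0 as soon as the side IR sensor no longer reports a wall within 4 inches.

```python
MAX_STUCKNESS = 3
NEAR_WALL_INCHES = 4.0


def update_side(previous, ir_inches, bump_pressed):
    """Return the new stuckness (0..3) for one side of the robot."""
    if bump_pressed:
        return MAX_STUCKNESS  # a pressed bump sensor is maximum stuckness
    if ir_inches <= NEAR_WALL_INCHES:
        return min(previous + 1, MAX_STUCKNESS)  # one more point, capped at 3
    return 0  # assumption: reset once the wall is no longer close


def wander_transition(stuck, sees_target, seconds_in_state):
    """Name the next state for Wander, checking the rules in priority order."""
    left, right = stuck
    if max(left, right) >= 2:
        return "Stuck"
    if sees_target:
        return "TurnAndLook"
    if max(left, right) >= 1:
        return "AvoidWall"
    if seconds_in_state >= 5:
        return "TurnAndLook"
    return 0  # keep wandering
```

Because the checks run top to bottom, a robot that both sees a ball and is badly stuck backs up first, which matches the ordering in the list.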

Some special notes on the states:

- the TurnAndLook state: Hermes remembers IR values as he turns. If she turns a certain amount and doesn't see a ball, then he turns back until it finds approximately the minimum IR value and Wanders in that direction. This ensures that she goes towards an open space. If Hermes sees a target then he turns in that direction until it is centered (within 100 pixels of the centerline).

- the ApproachXXX state: It calls ForwardToTarget with the right target argument. ForwardToTarget uses a PID (proportional-integral-derivative) controller with constants

  - K_p = 0.8 motor speed/degree

  - K_i = 0.1 motor speed/(degree*s)

  - K_d = 0.1 motor speed*s/degree

VisionSystem provides the coordinates of the center of the target. When the target was close (i.e. its y-coordinate was low), Hermes would forget about PID and just Charge.

- Charge: When charging the wall or pyramid, Hermes needed to know when it was aligned so it could release balls. To make up for possible malfunctioning of bump sensors (they tended to get banged around), I had multiple criteria. For instance, it would release when both bump sensors were pressed, or one was pressed and 5 seconds had passed, or IR values were within a certain range.
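The ApproachXXX and Charge notes above can be sketched as follows. This is a textbook PID loop using the gains quoted above, not the team's ForwardToTarget code. The sign convention in `motor_speeds` and the `ir_window` values in `should_release` are placeholders chosen for illustration, since the text only says the IR values had to be "within a certain range".

```python
class PID:
    """Textbook PID controller; gains default to the constants quoted above."""

    def __init__(self, kp=0.8, ki=0.1, kd=0.1):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.previous_error = None

    def update(self, error_degrees, dt):
        """Return a steering correction for the current bearing error."""
        self.integral += error_degrees * dt
        if self.previous_error is None:
            derivative = 0.0  # no history on the first sample
        else:
            derivative = (error_degrees - self.previous_error) / dt
        self.previous_error = error_degrees
        return (self.kp * error_degrees
                + self.ki * self.integral
                + self.kd * derivative)


def motor_speeds(base_speed, correction):
    """Assumed convention: a positive correction speeds up the left wheel."""
    return base_speed + correction, base_speed - correction


def should_release(bump_left, bump_right, seconds_charging, ir_reading,
                   ir_window=(2.0, 4.0)):
    """Redundant release criteria, so one broken bump sensor is not fatal."""
    if bump_left and bump_right:
        return True
    if (bump_left or bump_right) and seconds_charging >= 5:
        return True
    low, high = ir_window  # hypothetical range, not a calibrated value
    return low <= ir_reading <= high
```

Each control step would feed the target's bearing error into `update` and pass the result to `motor_speeds`. Once the robot is charging, `should_release` is polled instead.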

At first the targets were set to the right-color ball. After 2 minutes were up, a timer automatically changed the target to the goals. If Hermes scored successfully, the target was changed again to balls.

Vision System (David)

- For the Vision System, we decided to use OpenCV, which provides useful features and filters for selecting colors and determining where an object of a particular color is in a frame. The Vision System was coded in Python. Despite its slowness, Python proved the easiest to work with and debug.

- The Vision System started out as a matched filtering system: it was given a template image (significantly smaller than the camera images) and attempted to find that template within the image taken from the camera. This proved too slow and did not cover enough of the variation in the ball's appearance (due to variable lighting conditions). We therefore turned to color filtering techniques instead.

- When doing any form of color filtering it is first good to down-sample the image so that the image is represented by a smaller matrix and image processing takes less time. Then you should blur the image using some form of blurring filter. We decided to use a Gaussian blur instead of a simple averaging filter of surrounding pixels because it experimentally produced better results. The reason for blurring is to remove color noise in the image since neighboring pixels of a particular pixel are likely to be of the same color but skewed a bit due to lighting. We also decided to use the HSV color scheme because it proved easier to set up a filtering system for colors using an established hue range (that for the most part is static) and then adjust just the value and saturation values to compensate for lighting. OpenCV has many useful functions for determining if a pixel meets some criteria. I recommend exploring the OpenCV API document. Despite the documentation not being that good, it is a good place to start researching.

- Our vision system uses a concept of profiling so as to establish a color profile (a list of HSV ranges) for each of the desired targets. This was easy to fine-tune using a GUI we developed to edit these color profiles while filtering and scanning an image for targets (so that we could see the results of the change immediately). Our Vision System also uses shape detection: after an image is scanned for pixels that meet the color criteria, the matched section is subjected to a shape test. If the matched section passed the immense scrutiny, its location relative to the center of the image was logged. To deal with multiple targets we only logged the location of the closest target, which was determined by how low in the frame the target was: the lower the target was (i.e. the higher its y index), the closer it was. Logging and target selection were done via keywords (strings) and dictionaries.

- The Vision System was placed into its own process because Python threading is a pain due to the nonzero probability that one thread will continually grab the GIL and starve other threads (the solution is to spawn more interpreters via processes). If the other threads cannot run, particularly the state machine thread, then the robot will ram into walls continually and not respond to stimuli from the sensors. Thus, the fewer the threads the better. However, threading is NECESSARY to concurrently capture sensor data and operate the State Machine. We were able to communicate with the Vision System Process from the main process via a command queue that the main process would add commands to and from which the Vision System Process would continually extract commands. Then once the command was executed the Vision System Process would place the result (if there was one) in a data queue, from which the main process could extract the data. We got the best results using queues for process communication.

- Preliminary API Document for VisionSystem.py:

  - DEBUG_VISION - set this equal to 1 to print out important debug information if there is an error.

  - VisionSystemWrapper:

    - This is the main object used for communicating with the VisionSystem object via queues.

    - All methods here correspond to VisionSystem methods of the same name

  - VisionSystemApp:

    - This is the main GUI object used for fine tuning the color profiles of each of the targets. This is run in its own process and spawns the VisionSystem object in a thread in that process (this is required so that the GUI does not lock up).

  - VisionSystem:

    - This is the Vision System object that handles all image processing, target detection, and edge detection.

    - Main Data Storage Devices for VisionSystem:

      - self.targetColorProfiles - color profiles for target detection, list of ranges of HSV values

      - self.targetShapeProfiles - dictionary that points to functions to verify the shape of the specific target (the target is the key)

      - self.targetLocations - dictionary that keeps track of all the target data ascertained by the Vision System.

      - self.bestTargetOverall - holds the target data of the closest target

      - self.detectionThreshold - used to set the area threshold for detecting valid targets

    - Main Functions for VisionSystem:

      - def activate(self) - must be called after instantiation of VS object to turn on the VS

      - def addTarget(self,targetStr) - Add a target to be tracked by the VS

      - def removeTarget(self,targetStr) - remove a tracked target from VS

      - def getTargetDistFromCenter(self,*targetList) - Returns (x,y) dist of target center from center of image, @param target - string or list representing the target(s) for which you want to know the distance from the center, must be "all" or must be present as a key in the self.targetColorProfiles dictionary

      - def isClose(self,*targetList) - Returns true if the closest target of the tracked targets is close

      - def explore(self,image) - Main function that calls each of the individual processing modules.

      - def findTargets(self,initialProcessedImage) - Find Targets Processing Module, takes in an image and will locate the targets specified in the target list according to the target color profiles and the area thresholds.

      - def findTarget(self,processedImage,target,ignoreRegions=None) - Called by findTargets() to find a particular target.

      - def stop(self) - stops the Vision System
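To make the idea of a color profile (a list of HSV ranges per target) and the "lowest in the frame is closest" rule concrete, here is a simplified sketch. It uses only the standard-library `colorsys` module and tests single pixels, whereas the real system filtered whole images with OpenCV and applied a shape test. The profile values, the dictionary layout, and the function names are illustrative assumptions, not the contents of `self.targetColorProfiles`.

```python
import colorsys

# Hypothetical profiles: each target maps to a list of (low, high) HSV ranges,
# with every channel scaled to 0..1 as colorsys does.
TARGET_COLOR_PROFILES = {
    # Red sits at both ends of the hue circle, so it needs two ranges.
    "redBall": [((0.00, 0.5, 0.3), (0.05, 1.0, 1.0)),
                ((0.95, 0.5, 0.3), (1.00, 1.0, 1.0))],
    "greenBall": [((0.25, 0.4, 0.2), (0.45, 1.0, 1.0))],
}


def matches_profile(rgb, profile):
    """True if an (r, g, b) pixel in 0..255 falls inside any HSV range."""
    r, g, b = (channel / 255.0 for channel in rgb)
    hsv = colorsys.rgb_to_hsv(r, g, b)
    return any(
        all(lo <= value <= hi for lo, value, hi in zip(low, hsv, high))
        for low, high in profile
    )


def closest_target(detections):
    """Pick the detection lowest in the frame.

    Assumes y is an image row index that grows downward, so the largest y
    is the target nearest the robot.
    """
    if not detections:
        return None
    return max(detections, key=lambda detection: detection["y"])
```

Keeping the hue limits fixed and widening only the saturation and value limits is what makes a profile like this quick to retune when the lighting changes.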

NOTE: THIS SOFTWARE HAS A MEMORY LEAK! OpenCV Images will start to accumulate in physical memory. After a period of time depending on how much RAM your computer has, the system will no longer function and will spawn an error indicating that a memory allocation failed! In our tests we were able to last 3-4 minutes. If you have more RAM on your computer you can last longer. Please plan accordingly!

Debugging

Python allocates time to its threads HORRIBLY. At the beginning we ran the state machine, sensor modules, and VisionSystem in separate threads. However, this meant that our state machine updated very slowly---once per second---(even with time.sleep's) so we made two fixes:

- Changed VisionSystem to a Process.

- De-threaded all the sensors.

After this Hermes responded much faster.
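A minimal sketch of the command-queue/data-queue pattern behind the first fix is shown below, using the standard `multiprocessing` module. The command tuple format, the `locate` stand-in, and the fixed coordinates it returns are assumptions for illustration; the actual VisionSystemWrapper may be organized differently.

```python
import multiprocessing as mp


def locate(target):
    """Hypothetical stand-in for the real image processing."""
    fake_positions = {"redBall": (12, 40)}
    return fake_positions.get(target)


def vision_worker(commands, results):
    """Serve commands from one queue and put answers on the other."""
    while True:
        command, argument = commands.get()  # blocks until a command arrives
        if command == "stop":
            break
        if command == "getTargetDistFromCenter":
            results.put((argument, locate(argument)))


def run_demo():
    """Start the worker in its own process, ask one question, shut it down."""
    commands, results = mp.Queue(), mp.Queue()
    worker = mp.Process(target=vision_worker, args=(commands, results))
    worker.start()
    commands.put(("getTargetDistFromCenter", "redBall"))
    answer = results.get(timeout=5)
    commands.put(("stop", None))
    worker.join(timeout=5)
    return answer
```

`run_demo()` should be called from under an `if __name__ == "__main__":` guard, which `multiprocessing` requires on platforms that start child processes by spawning a fresh interpreter. Because the worker runs in a separate interpreter, it cannot hold the main process's GIL, so the state machine keeps updating while images are processed.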

Overall performance

Hermes stuffed hermself with balls, and sometimes banged the button to get more balls. During seeding, (s)he was the most energetic of all the robots, ramming into walls and turning the pyramid around for several revolutions.

After seeding, Hermes grew out of her "bumping-into-walls" phase, and learned to score. We got 70 points in our match against the Mengineers, but as they were the team that eventually won the competition by scoring into the top level of the pyramid, we were eliminated. Our 70 points came from:

- 60 points from collecting 4 balls and depositing them into the purple pyramid, and

- 10 points from collecting 2 more balls.

Conclusions/suggestions for future teams

Roxana:

- Organize your electronics FROM THE START. This is compulsory. Weak connections caused our robot to not really be able to run our very first match (Right motor wires were not in motor controller all the way).

- Try to make stuff/prototypes early. Anything. Make Anything Early.

- MAYBE HAVE A MECHE ON YOUR TEAM?? Although I can't say it went badly. If you've never done this stuff before, keep it all really simple. It was awesome. Even if you think you might fail at stuff, keep going. Ask for help.

- Huge thanks to all the Maslab Staff (IHOP ANYONE???), Mark B. (and the rest of Edgerton staff), Ron W. (of CSAIL), and Richard B. (and the rest of the CMS) for all the super mega awesome help. Each of you should get to choose between Donuts and Chocolate.

- Super Mega Foxy Awesome Hot Teams:

- Thanks to HexnetLLC (team 1) ([2]) for laser cutting our stuff the first two times and being generally wonderfully supportive and helpful.

- Thanks to Janky Woebot (team 7) ([3]) for keeping us oddly reassured and relaxed (whenever I soldered something, Melody was always laughing with you guys ^_^). Your robot's helix moved so fast...almost as fast as my heart fell for it.

- And no less thanks to all the other teams for being friendly all through this "competition".

Melody:

- Split up the work at the beginning! Like, 2 builders, 2 programmers. Also, make a schedule and try to keep to it, so that you accomplish a bit of something every day.

- Get Edgerton access. For almost 3 weeks, we only had one person on our team who had Edgerton access, which made things super duper slow, on the building side. But there were no other training sessions. I ended up just talking to Mark (Edgerton manager) and he let me work there, even though I wasn't officially trained.

- Find someone with lasercutting access. It'll be useful when your acrylic pieces break...

- Try to finish a CAD model of your robot as early as possible.

- Also, if you don't know what to do, just ask the TAs. For us, especially at the beginning, we felt super lost and were a bit intimidated by them. But they're super helpful and really nice people, and we got to know some of them pretty well.

- FINALIZE YOUR STRATEGY. Our team somehow didn't decide that we only wanted to score over the wall / lower level of pyramid until the very, very end, which made building very stressful. Know what you want to do. Also, even if you don't have mechanical ability, don't be afraid to aim high (literally, in our case). You'll figure things out! Also, mechEs on other teams are especially nice and helpful when you have questions.

Holden:

- Don't use threads in python. Our robot ran slowly because of threading, and we didn't fix it until 2 hours before competition. (Python threading is badly implemented because of the global interpreter lock.)

- Start with simple architecture. A state machine worked well. A good state machine will do better than a bad state machine with fancy vision/mapping algorithms. Getting the basic stuff working takes priority over anything fancy! Although we only had a simple state machine working, we did a lot better than some teams who tried mapping and wall following.

- Spend time developing robust stuck detection. One of the biggest stumbling blocks for teams during the final competition was robots getting stuck and whiling away 1 minute or the rest of the 3 minutes. (Our robot spent 30 seconds in a turn right-turn left-go forward-back up loop---it was stuck at a corner of the field. We hadn't foreseen this because this was a loop of different states. More testing could have uncovered these kinds of loops.) Even for a slow robot, it doesn't take long for it to center on a ball and approach it: 3 minutes is a lot, but not if your robot gets stuck.

- Divide up work well. It's better to divide up work somewhat arbitrarily, than to waste time thinking how to divide up work.

- Do not use Github unless you know what you are doing. During our project, Github managed to...

- Erase an entire day of code (detached heads...),

- auto-merge conflicting files by mashing two different classes together, and repeating a class within the same file.

Personally, I recommend using Dropbox as it has an easy interface and keeps previous versions.

David:

- Generally, it is good to have a modular design with simple functions that are put together to produce complex behavior rather than having one convoluted and difficult to understand function.

- Avoid Threading in Python and use processes instead or use a different language like Java.

- Test as much as you can!

- When debugging, a quick route to fixing the issue is to use print statements to determine where your program is getting stuck.

- Good luck!